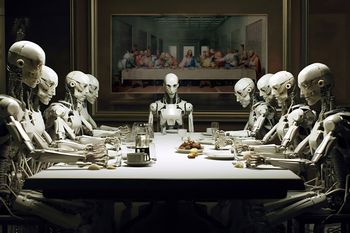

Sam Harris speaks with Eliezer Yudkowsky and Nate Soares about their new book, If Anyone Builds It, Everyone Dies: The Case Against Superintelligent AI. They discuss the alignment problem, ChatGPT and recent advances in AI, the Turing Test, the possibility of AI developing survival instincts, hallucinations and deception in LLMs, why many prominent voices in tech remain skeptical of the dangers of superintelligent AI, the timeline for superintelligence, real-world consequences of current AI systems, the imaginary line between the internet and reality, why Eliezer and Nate believe superintelligent AI would necessarily end humanity, how we might avoid an AI-driven catastrophe, the Fermi paradox, and other topics.

Eliezer Yudkowsky is a founding researcher in the field of AI alignment and the co-founder of the Machine Intelligence Research Institute. With influential work spanning more than twenty years, Yudkowsky has played a major role in shaping the public conversation about smarter-than-human AI. He appeared on Time magazine's 2023 list of the 100 Most Influential People in AI, and has been discussed in or interviewed by The New Yorker, Newsweek, Forbes, Wired, Bloomberg, The Atlantic, The Economist, The Washington Post, and other outlets.

Website: https://ifanyonebuildsit.com/

X: @ESYudkowsky

Nate Soares is the president of the Machine Intelligence Research Institute. He has worked in the AI alignment field for over a decade, and previously held positions at Microsoft and Google. Soares is the author of a substantial body of technical and semi-technical writing on AI alignment, including foundational work on value learning, decision theory, and power-seeking incentives in smarter-than-human AIs.

Website: https://ifanyonebuildsit.com/

X: @So8res